With GCP

For detailed instructions on setting up your GCP bucket, refer to the Bucket Management documentation.

We can now start issuing problems to the TitanQ Solver. Below are some examples, with expected results, that you can run locally from your favorite IDE or Python environment.

Note that the following Python modules need to be installed:

- google-cloud-storage (for Google Cloud Storage bucket access)

- numpy (for generating the input data and exchanging it as .npy files)

- requests (for sending HTTP requests to the TitanQ API)
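If you want to confirm these packages are installed before running anything, here is a small optional check. It is a convenience sketch only: the import-name to pip-name mapping is our own, and it includes requests because the solve scripts further down import it.

```python
import importlib.util

# Import name -> pip package name for the modules used in this guide.
REQUIRED = {
    "google.cloud.storage": "google-cloud-storage",
    "numpy": "numpy",
    "requests": "requests",
}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be found."""
    missing = []
    for module_name, package in required.items():
        try:
            found = importlib.util.find_spec(module_name) is not None
        except ModuleNotFoundError:
            # find_spec imports parent packages (e.g. google.cloud) and raises if they are absent.
            found = False
        if not found:
            missing.append(package)
    return missing


missing = missing_packages()
if missing:
    print("Install with: pip install " + " ".join(missing))
else:
    print("All required packages are installed.")
```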

Generate a weights matrix and a bias vector

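The examples on this page use three problems: N10, N150 and K2000. The code below generates the N10 weights matrix (10 x 10) and a zero bias vector, and saves them as weights.npy and bias.npy: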

```python
import numpy as np

# N10: symmetric 10 x 10 weights matrix with a zero diagonal
weights = np.array([[ 0, -1, -1, -1,  1, -1,  1, -1, -1,  1],
                    [-1,  0,  1, -1, -1,  1, -1, -1, -1,  1],
                    [-1,  1,  0, -1,  1, -1,  1, -1, -1, -1],
                    [-1, -1, -1,  0, -1,  1,  1, -1,  1, -1],
                    [ 1, -1,  1, -1,  0, -1, -1,  1, -1,  1],
                    [-1,  1, -1,  1, -1,  0, -1, -1,  1,  1],
                    [ 1, -1,  1,  1, -1, -1,  0,  1,  1,  1],
                    [-1, -1, -1, -1,  1, -1,  1,  0,  1,  1],
                    [-1, -1, -1,  1, -1,  1,  1,  1,  0,  1],
                    [ 1,  1, -1, -1,  1,  1,  1,  1,  1,  0]], dtype=np.float32)

# One bias entry per variable (all zero here)
bias = np.zeros(len(weights), dtype=np.float32)

np.save('weights.npy', weights)
np.save('bias.npy', bias)
```
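Before uploading, a quick sanity check on the two arrays can catch shape or dtype mistakes early. This is a minimal sketch under our own assumptions: check_problem is a hypothetical helper, the shape and dtype checks mirror the N10 example (square float32 weights, one float32 bias entry per variable), and the symmetry and zero-diagonal checks reflect that example rather than a documented TitanQ requirement.

```python
import os
import tempfile

import numpy as np


def check_problem(weights, bias):
    """Return the number of variables after basic consistency checks (hypothetical helper)."""
    if weights.ndim != 2 or weights.shape[0] != weights.shape[1]:
        raise ValueError(f"weights must be square, got shape {weights.shape}")
    n = weights.shape[0]
    if bias.shape != (n,):
        raise ValueError(f"bias must have shape ({n},), got {bias.shape}")
    if weights.dtype != np.float32 or bias.dtype != np.float32:
        raise ValueError("weights and bias should both be float32")
    # The two checks below match the N10 example; they are assumptions, not documented rules.
    if not np.array_equal(weights, weights.T):
        raise ValueError("weights is not symmetric")
    if np.any(np.diag(weights) != 0):
        raise ValueError("weights has a non-zero diagonal")
    return n


# Round-trip a tiny 3-variable problem through .npy files, as the upload step expects files.
w = np.array([[0, 1, -1], [1, 0, 1], [-1, 1, 0]], dtype=np.float32)
b = np.zeros(len(w), dtype=np.float32)
with tempfile.TemporaryDirectory() as tmp:
    np.save(os.path.join(tmp, "weights.npy"), w)
    np.save(os.path.join(tmp, "bias.npy"), b)
    n = check_problem(np.load(os.path.join(tmp, "weights.npy")),
                      np.load(os.path.join(tmp, "bias.npy")))
print(n)  # 3
```

Run against the files generated above, check_problem(np.load('weights.npy'), np.load('bias.npy')) should return 10, which is also the length of the 'variable_types' string ('b'*10) used in the N10 solve request.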

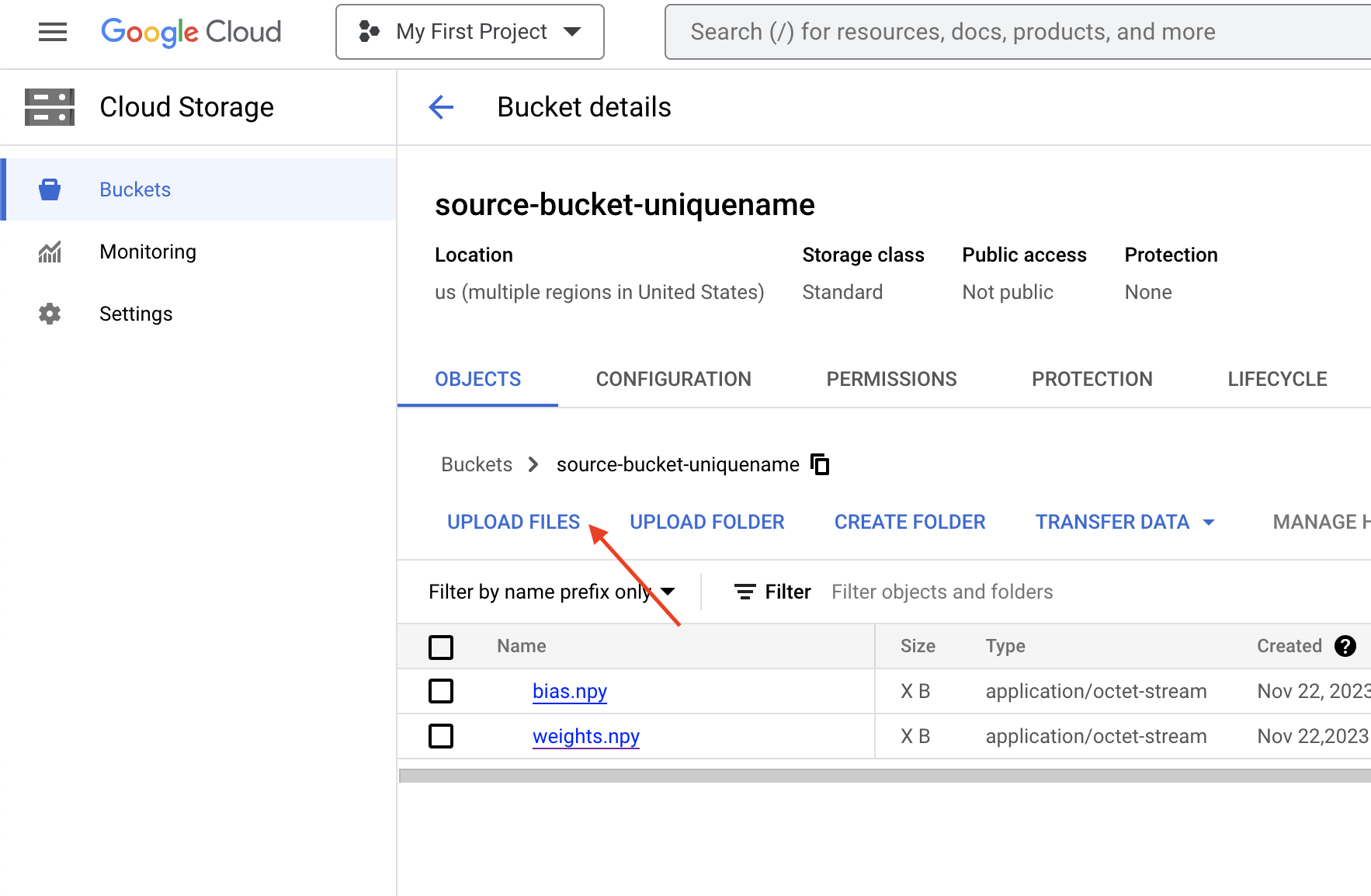

Upload the two files to the source bucket

From the Google Cloud Bucket dashboard, navigate to your source bucket, then select UPLOAD FILES:

Alternatively, upload the files programmatically with Python or the gcloud CLI:

Python:

```python
from google.cloud import storage
from google.oauth2 import service_account

# TODO insert your WRITE key here, from the downloaded .json file
write_key = {}

creds = service_account.Credentials.from_service_account_info(write_key)
storage_client = storage.Client(credentials=creds)
bucket = storage_client.bucket("SOURCE_BUCKET_NAME")

# Object names must match 'weights_file_name' and 'bias_file_name' in the solve request
blob = bucket.blob("weights.npy")
blob.upload_from_filename("YOUR_LOCAL_WEIGHTS_FILE.npy")

blob = bucket.blob("bias.npy")
blob.upload_from_filename("YOUR_LOCAL_BIAS_FILE.npy")
```

gcloud:

This assumes the Google Cloud CLI is installed and set to the project you're working on. The user account you authenticated with when initializing gcloud (gcloud init) needs permission to upload to the bucket.

```bash
#!/bin/bash
gcloud storage cp YOUR_LOCAL_WEIGHTS_FILE.npy gs://SOURCE_BUCKET_NAME/weights.npy
gcloud storage cp YOUR_LOCAL_BIAS_FILE.npy gs://SOURCE_BUCKET_NAME/bias.npy
```
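After either upload method, you can confirm that both objects landed in the source bucket before issuing a solve request. The helper below is a minimal sketch (missing_blobs is our own name, not part of any SDK); it relies only on Bucket.blob() and Blob.exists() from google-cloud-storage, and the credentials behind the bucket object need permission to read object metadata in that bucket.

```python
def missing_blobs(bucket, object_names):
    """Return the names in object_names that do not exist in bucket.

    bucket is expected to be a google.cloud.storage.Bucket, or anything with the
    same bucket.blob(name).exists() interface. Blob.exists() issues one metadata
    request per object.
    """
    return [name for name in object_names if not bucket.blob(name).exists()]


# Example, reusing the bucket object from the Python upload snippet:
# missing = missing_blobs(bucket, ["weights.npy", "bias.npy"])
# if missing:
#     print("Not uploaded yet:", missing)
```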

Issuing a solve request

Submitting a request to the TitanQ API involves preparing a JSON body and a few HTTP header parameters.

In the examples below:

- make sure to fill in:

  - your API key (API_KEY)

  - your source bucket name (SOURCE_BUCKET_NAME)

  - your destination bucket name (DEST_BUCKET_NAME)

  - your project name (PROJECT_NAME)

- make sure to have these files:

  - the READ key (.json) and WRITE key (.json) downloaded in Generating Keys
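The 'json_key' fields in the request bodies below expect the contents of these key files. Instead of pasting the keys into the scripts, you could load them from disk with a helper such as this one; gcp_section and the file names read_key.json and write_key.json are our own, for illustration only.

```python
import json


def gcp_section(bucket_name, key_path):
    """Build one 'gcp' entry of the solve request from a downloaded key file.

    key_path points at a service-account key (.json) from Generating Keys; its
    parsed contents become the 'json_key' value.
    """
    with open(key_path, "r") as f:
        json_key = json.load(f)
    return {"bucket_name": bucket_name, "json_key": json_key}


# Hypothetical local file names for the two downloaded keys:
# titanq_req_body['input']['gcp'] = gcp_section('SOURCE_BUCKET_NAME', 'read_key.json')
# titanq_req_body['output']['gcp'] = gcp_section('DEST_BUCKET_NAME', 'write_key.json')
```

The same READ key file can also back the storage client that downloads the results, via service_account.Credentials.from_service_account_file('read_key.json').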

These problems were originally formulated as bipolar problems; here we solve them as binary problems. To work with them in their original bipolar form, use the bipolar-to-binary converter provided in the TitanQ SDK.
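For reference, the textbook way to turn a bipolar (s in {-1, +1}) quadratic problem into a binary (x in {0, 1}) one is the substitution s = 2x - 1. The sketch below applies it assuming an energy of the form E(s) = s^T W s + b^T s with a symmetric W. This is not the TitanQ SDK converter, whose API is not shown on this page, and TitanQ's own energy convention (for example, a factor of 1/2 on the quadratic term) may differ. The brute-force check confirms that both energies agree on every state of a small problem.

```python
import itertools

import numpy as np


def bipolar_to_binary(W, b):
    """Rewrite E(s) = s^T W s + b^T s, s in {-1, +1}^n, as
    E(x) = x^T W_bin x + b_bin^T x + offset, x in {0, 1}^n, using s = 2x - 1.

    Assumes W is symmetric (the energy convention itself is an assumption).
    """
    ones = np.ones(len(b), dtype=W.dtype)
    W_bin = 4 * W
    b_bin = 2 * b - 4 * (W @ ones)
    offset = ones @ W @ ones - b @ ones
    return W_bin, b_bin, offset


def max_mismatch(W, b):
    """Brute-force check (small n only): largest |E_bipolar(s) - E_binary(x)| over all states."""
    W_bin, b_bin, offset = bipolar_to_binary(W, b)
    worst = 0.0
    for bits in itertools.product([0, 1], repeat=len(b)):
        x = np.array(bits, dtype=W.dtype)
        s = 2 * x - 1
        e_bipolar = s @ W @ s + b @ s
        e_binary = x @ W_bin @ x + b_bin @ x + offset
        worst = max(worst, abs(float(e_bipolar - e_binary)))
    return worst


W = np.array([[0, 1, -1], [1, 0, 1], [-1, 1, 0]], dtype=np.float32)
b = np.array([0.5, -1.0, 2.0], dtype=np.float32)
print(max_mismatch(W, b))  # should be zero, up to floating-point rounding
```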

Request scripts for the N10, N150 and K2000 problems follow.

N10:

```python
import io
import json
import time
import zipfile

import numpy as np
import requests
from google.cloud import storage
from google.oauth2 import service_account

# TODO insert your READ key here, from the downloaded .json file
READ_KEY = {}
# TODO insert your WRITE key here, from the downloaded .json file
WRITE_KEY = {}


def main():
    hdr = {
        'content-type': 'application/json',
        'authorization': 'API_KEY',
    }

    titanq_req_body = {
        'input': {
            'bias_file_name': 'bias.npy',
            'weights_file_name': 'weights.npy',
            'gcp': {
                'bucket_name': 'SOURCE_BUCKET_NAME',
                'json_key': READ_KEY,
            },
        },
        'output': {
            'gcp': {
                'bucket_name': 'DEST_BUCKET_NAME',
                'json_key': WRITE_KEY,
            },
            'result_archive_file_name': 'titanq/quickstart_result.zip',
        },
        'parameters': {
            'beta': (1 / np.linspace(0.5, 100, 8, dtype=np.float32)).tolist(),
            'coupling_mult': 0.5,
            'num_chains': 8,
            'num_engines': 4,
            'timeout_in_secs': 0.1,
            'variable_types': 'b' * 10,
        },
    }

    try:
        resp = requests.post(
            'https://titanq.infinityq.io/v1/solve',
            headers=hdr,
            data=json.dumps(titanq_req_body))

        if 200 <= resp.status_code < 300:
            # Parse the request's confirmation
            titanq_response_body = resp.json()
            print('Computation', titanq_response_body['computation_id'],
                  'status:', titanq_response_body['status'],
                  '-', titanq_response_body['message'])

            # Wait for the computation to complete.
            # Guesstimate, assuming no delay in the queue, etc.
            time.sleep(titanq_req_body['parameters']['timeout_in_secs'] + 3)

            # Download the result archive from the destination bucket
            creds = service_account.Credentials.from_service_account_info(READ_KEY)
            storage_client = storage.Client(credentials=creds)
            bucket = storage_client.bucket(titanq_req_body['output']['gcp']['bucket_name'])
            blob = bucket.blob(titanq_req_body['output']['result_archive_file_name'])
            buff = io.BytesIO(blob.download_as_bytes())

            # Unzip to the current directory
            with zipfile.ZipFile(buff, 'r') as zip_file:
                zip_file.extractall(".")

            # Inspect results
            with open('metrics.json', 'r') as f:
                metrics = json.load(f)

            print("-" * 15, "+", "-" * 22, sep="")
            print("Ising energy   | Expected ising energy")
            print("-" * 15, "+", "-" * 22, sep="")
            for ising_energy in metrics['ising_energy']:
                print(f"{ising_energy: <14f} | -35")
        else:
            print('Request yielded HTTP {}'.format(resp.status_code))

    except Exception as e:
        print('Exception ', e)


if __name__ == '__main__':
    main()
```
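Each script waits for a fixed, guessed duration (time.sleep) before downloading the archive, which can fail if the job is queued or runs longer than expected. A sketch of an alternative, assuming the result archive only appears in the destination bucket once the computation has finished: poll for the object with Blob.exists() until a deadline. wait_for_blob is a hypothetical helper, not part of the TitanQ API.

```python
import time


def wait_for_blob(bucket, name, timeout_s=60.0, poll_s=1.0,
                  sleep=time.sleep, clock=time.monotonic):
    """Poll until object `name` exists in `bucket`; return True if it appeared in time.

    bucket is expected to behave like google.cloud.storage.Bucket.
    sleep and clock are injectable so the loop can be tested without waiting.
    """
    blob = bucket.blob(name)
    deadline = clock() + timeout_s
    while not blob.exists():
        if clock() >= deadline:
            return False
        sleep(poll_s)
    return True


# In the scripts, create `bucket` first and use this in place of time.sleep(...):
# if not wait_for_blob(bucket, titanq_req_body['output']['result_archive_file_name'], timeout_s=60):
#     raise TimeoutError("result archive did not appear in time")
```

Because all three scripts write to the same result_archive_file_name, an archive left over from an earlier run would make this check succeed immediately; giving each run its own archive name (for example, one that includes a timestamp) avoids that.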

N150:

```python
import io
import json
import time
import zipfile

import numpy as np
import requests
from google.cloud import storage
from google.oauth2 import service_account

# TODO insert your READ key here, from the downloaded .json file
READ_KEY = {}
# TODO insert your WRITE key here, from the downloaded .json file
WRITE_KEY = {}


def main():
    hdr = {
        'content-type': 'application/json',
        'authorization': 'API_KEY',
    }

    titanq_req_body = {
        'input': {
            'bias_file_name': 'N150-bias.npy',
            'weights_file_name': 'N150-weights.npy',
            'gcp': {
                'bucket_name': 'SOURCE_BUCKET_NAME',
                'json_key': READ_KEY,
            },
        },
        'output': {
            'gcp': {
                'bucket_name': 'DEST_BUCKET_NAME',
                'json_key': WRITE_KEY,
            },
            'result_archive_file_name': 'titanq/quickstart_result.zip',
        },
        'parameters': {
            'beta': (1 / np.linspace(0.5, 40, 16, dtype=np.float32)).tolist(),
            'coupling_mult': 0.5,
            'num_chains': 16,
            'num_engines': 4,
            'timeout_in_secs': 0.5,
            'variable_types': 'b' * 150,
        },
    }

    try:
        resp = requests.post(
            'https://titanq.infinityq.io/v1/solve',
            headers=hdr,
            data=json.dumps(titanq_req_body))

        if 200 <= resp.status_code < 300:
            # Parse the request's confirmation
            titanq_response_body = resp.json()
            print('Computation', titanq_response_body['computation_id'],
                  'status:', titanq_response_body['status'],
                  '-', titanq_response_body['message'])

            # Wait for the computation to complete.
            # Guesstimate, assuming no delay in the queue, etc.
            time.sleep(titanq_req_body['parameters']['timeout_in_secs'] + 3)

            # Download the result archive from the destination bucket
            creds = service_account.Credentials.from_service_account_info(READ_KEY)
            storage_client = storage.Client(credentials=creds)
            bucket = storage_client.bucket(titanq_req_body['output']['gcp']['bucket_name'])
            blob = bucket.blob(titanq_req_body['output']['result_archive_file_name'])
            buff = io.BytesIO(blob.download_as_bytes())

            # Unzip to the current directory
            with zipfile.ZipFile(buff, 'r') as zip_file:
                zip_file.extractall(".")

            # Inspect results
            with open('metrics.json', 'r') as f:
                metrics = json.load(f)

            print("-" * 15, "+", "-" * 22, sep="")
            print("Ising energy   | Expected ising energy")
            print("-" * 15, "+", "-" * 22, sep="")
            for ising_energy in metrics['ising_energy']:
                print(f"{ising_energy: <14f} | -2657")
        else:
            print('Request yielded HTTP {}'.format(resp.status_code))

    except Exception as e:
        print('Exception ', e)


if __name__ == '__main__':
    main()
```
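The scripts extract the whole archive into the current directory and then open metrics.json. If you only need the metrics, a minimal sketch can read metrics.json straight from the downloaded bytes, assuming (as the scripts do) that the file sits at the root of the archive. read_metrics and best_energy are our own helper names.

```python
import io
import json
import zipfile


def read_metrics(archive_bytes, member="metrics.json"):
    """Parse metrics.json directly from the result archive bytes, without extracting to disk."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as zf:
        return json.loads(zf.read(member))


def best_energy(metrics):
    """Lowest value in metrics['ising_energy'], the list the scripts print row by row."""
    return min(metrics["ising_energy"])


# In the scripts, in place of the extractall/open steps:
# metrics = read_metrics(blob.download_as_bytes())
# print("Best ising energy:", best_energy(metrics))
```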

K2000:

```python
import io
import json
import time
import zipfile

import numpy as np
import requests
from google.cloud import storage
from google.oauth2 import service_account

# TODO insert your READ key here, from the downloaded .json file
READ_KEY = {}
# TODO insert your WRITE key here, from the downloaded .json file
WRITE_KEY = {}


def main():
    hdr = {
        'content-type': 'application/json',
        'authorization': 'API_KEY',
    }

    titanq_req_body = {
        'input': {
            'bias_file_name': 'K2000-bias.npy',
            'weights_file_name': 'K2000-weights.npy',
            'gcp': {
                'bucket_name': 'SOURCE_BUCKET_NAME',
                'json_key': READ_KEY,
            },
        },
        'output': {
            'gcp': {
                'bucket_name': 'DEST_BUCKET_NAME',
                'json_key': WRITE_KEY,
            },
            'result_archive_file_name': 'titanq/quickstart_result.zip',
        },
        'parameters': {
            'beta': (1 / np.linspace(2, 50, 32, dtype=np.float32)).tolist(),
            'coupling_mult': 0.13,
            'num_chains': 32,
            'num_engines': 4,
            'timeout_in_secs': 10.0,
            'variable_types': 'b' * 2000,
        },
    }

    try:
        resp = requests.post(
            'https://titanq.infinityq.io/v1/solve',
            headers=hdr,
            data=json.dumps(titanq_req_body))

        if 200 <= resp.status_code < 300:
            # Parse the request's confirmation
            titanq_response_body = resp.json()
            print('Computation', titanq_response_body['computation_id'],
                  'status:', titanq_response_body['status'],
                  '-', titanq_response_body['message'])

            # Wait for the computation to complete.
            # Guesstimate, assuming no delay in the queue, etc.
            time.sleep(titanq_req_body['parameters']['timeout_in_secs'] + 10)

            # Download the result archive from the destination bucket
            creds = service_account.Credentials.from_service_account_info(READ_KEY)
            storage_client = storage.Client(credentials=creds)
            bucket = storage_client.bucket(titanq_req_body['output']['gcp']['bucket_name'])
            blob = bucket.blob(titanq_req_body['output']['result_archive_file_name'])
            buff = io.BytesIO(blob.download_as_bytes())

            # Unzip to the current directory
            with zipfile.ZipFile(buff, 'r') as zip_file:
                zip_file.extractall(".")

            # Inspect results
            with open('metrics.json', 'r') as f:
                metrics = json.load(f)

            print("-" * 15, "+", "-" * 22, sep="")
            print("Ising energy   | Expected ising energy")
            print("-" * 15, "+", "-" * 22, sep="")
            for ising_energy in metrics['ising_energy']:
                print(f"{ising_energy: <14f} | -134388")
        else:
            print('Request yielded HTTP {}'.format(resp.status_code))

    except Exception as e:
        print('Exception ', e)


if __name__ == '__main__':
    main()
```
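The three scripts differ only in the input file names, problem size, beta range, number of chains, coupling_mult, timeout, extra wait and expected energy. If you prefer a single script, the request body could be built by a helper like the one below. build_request and the PROBLEMS table are our own, hypothetical names; every value in the table is copied from the scripts above.

```python
import numpy as np

# Per-problem settings copied from the N10, N150 and K2000 scripts.
PROBLEMS = {
    "N10": {"weights": "weights.npy", "bias": "bias.npy", "n": 10,
            "beta_range": (0.5, 100), "num_chains": 8, "coupling_mult": 0.5,
            "timeout_in_secs": 0.1, "extra_wait_s": 3, "expected_ising_energy": -35},
    "N150": {"weights": "N150-weights.npy", "bias": "N150-bias.npy", "n": 150,
             "beta_range": (0.5, 40), "num_chains": 16, "coupling_mult": 0.5,
             "timeout_in_secs": 0.5, "extra_wait_s": 3, "expected_ising_energy": -2657},
    "K2000": {"weights": "K2000-weights.npy", "bias": "K2000-bias.npy", "n": 2000,
              "beta_range": (2, 50), "num_chains": 32, "coupling_mult": 0.13,
              "timeout_in_secs": 10.0, "extra_wait_s": 10, "expected_ising_energy": -134388},
}


def build_request(problem, read_key, write_key,
                  source_bucket="SOURCE_BUCKET_NAME", dest_bucket="DEST_BUCKET_NAME",
                  result_name="titanq/quickstart_result.zip"):
    """Return the JSON body the scripts above send for one of the three problems."""
    p = PROBLEMS[problem]
    lo, hi = p["beta_range"]
    return {
        "input": {
            "bias_file_name": p["bias"],
            "weights_file_name": p["weights"],
            "gcp": {"bucket_name": source_bucket, "json_key": read_key},
        },
        "output": {
            "gcp": {"bucket_name": dest_bucket, "json_key": write_key},
            "result_archive_file_name": result_name,
        },
        "parameters": {
            # The scripts use as many beta values as chains.
            "beta": (1 / np.linspace(lo, hi, p["num_chains"], dtype=np.float32)).tolist(),
            "coupling_mult": p["coupling_mult"],
            "num_chains": p["num_chains"],
            "num_engines": 4,
            "timeout_in_secs": p["timeout_in_secs"],
            "variable_types": "b" * p["n"],
        },
    }


# Example: body = build_request("K2000", READ_KEY, WRITE_KEY), then post json.dumps(body)
# and wait roughly timeout_in_secs + PROBLEMS["K2000"]["extra_wait_s"] seconds, as the scripts do.
```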